We usually observe locking problems in applications that use monolithically increase identifiers for their application components, where multiple sessions are trying to obtain the next identifier, in all this situations it´s highly recommendable to use "sequences" from the database side, that will allow less locking problems within the databases:

With locking problems:

update serials set lastvalue=lastvalue+1 where type='invoice' returning lastvalue into vlastval;

Insert into invoices (id, …) values (vlastval, …);

For a single session, the "update serials" command will create an exclusive row lock in the row with "type='invoice'". When you have multiple sessions trying to obtain the next identifier, each session that obtains the lock will block all other sessions trying to obtain the same lock, so a locking contention appears at the database server level. Your database server "precious" time is wasting only waiting for locks to be released …, just not doing anything good for the business.

Without locking problems:

Insert into invoices (id, …) values (nextval('seq_invoice_serial'), …);

Here we obtain the next identifier by using the sequence "seq_invoice_serial", that will not generate the locks/blocks observed before.

The problem arrives when application logic is not using a standard number for those identifiers, so "sequences" approach seams to not work properly, by example, imagine an application component (billing) that create their billing identifiers by this formula:

"AYYYYMMS9999999", where:

- A=Fixed character

- YYYY=Year

- MM=Month

- S=Fixed character

- 9999999=Sequential number reset to 1 on each new Month

So, we could have this identifiers:

A202010S0001234, A202010S0001235, A202011S0000001, A202011S0000001, …

This special identifiers doesn´t match exactly with the database "sequences" approach. With sequences we can control the increment (increment by 1,2…), the minvalue, the maxvalue, if cycling values, but we can´t have a sequential string or reset based in a timeframe, here you have the sequence create command:

CREATE SEQUENCE [ IF NOT EXISTS ] sequence_name [ AS { SMALLINT | INT | BIGINT } ] [ INCREMENT [ BY ] increment ] [ MINVALUE minvalue | NO MINVALUE ] [ MAXVALUE maxvalue | NO MAXVALUE ] [ START [ WITH ] start ] [ CACHE cache ] [ [ NO ] CYCLE ] [ OWNED BY { table_name.column_name | NONE } ]

In the following example we will illustrate and measure how could we remove locking problems in this scenario by still using the "sequence" approach.

Original billing sequential numbering

Here we´ll show a generic billing sequential number process:

Test datamodel

create table serials (type varchar(100), sequence bigint, yearmonth varchar(6), lastvalue varchar(20));

create index serials_01_I on serials(type);

insert into serials values ('invoice',0,'','');

create table invoices (code varchar(20), fx_datetime timestamp);

Function to get next identifier

create function get_next_invoice()

returns varchar as $$

declare

vlastval varchar:='';

begin

update serials set

sequence=(case when to_char(now(),'YYYYMM')=yearmonth then sequence+1

else 1

end),

yearmonth=(case when to_char(now(),'YYYYMM')=yearmonth then yearmonth

else to_char(now(),'YYYYMM')

end),

lastvalue=(case when to_char(now(),'YYYYMM')=yearmonth then 'A'||to_char(now(),'YYYYMM')||LPAD((sequence+1)::varchar,7,'0')

else 'A'||to_char(now(),'YYYYMM')||'0000001'

end)

where type='invoice'

returning lastvalue into vlastval;

return vlastval;

end;

$$ language plpgsql;

Here we use the serials table to update always the same row (to guarantee uniqueness and atomically increase identifiers), checking if we are in a new month, we set the sequence to 1 and generating the lastvalue as a string as the application request "AYYYYMMS9999999"

Example function usage

insert into invoices select get_next_invoice(), current_timestamp;

Benchmark

To create a benchmark, we will create a file: "get_invoice.sql" with the only command:

insert into invoices select get_next_invoice(), current_timestamp;

And will execute the pgbench command (https://www.postgresql.org/docs/10/pgbench.html:(

pgbench -h <servername>.postgres.database.azure.com -p 5432 -U frpardil@<servername> --protocol=prepared -P 1 --time=20 --client=30 -r --jobs=6 test1 -f get_invoice.sql

- -r => to show per statement latencies

- -P 1 => to show metrics each 1 second

- --time=20 => 20 seconds of test

- --client=30 => 30 different sessions

- --jobs=6 => 6 jobs managing the client sessions

Test server is an Azure Database for PostgreSQL server, Tier: General Purpose, version:10, 4vCores, public IP access, (https://docs.microsoft.com/en-us/azure/postgresql/concepts-pricing-tiers)

pgbench -h <servername>.postgres.database.azure.com -p 5432 -U frpardil@<servername> --protocol=prepared -P 1 --time=20 --client=30 -r --jobs=6 test1 -f get_invoice.sql

starting vacuum...ERROR: relation "pgbench_branches" does not exist

(ignoring this error and continuing anyway)

ERROR: relation "pgbench_tellers" does not exist

(ignoring this error and continuing anyway)

ERROR: relation "pgbench_history" does not exist

(ignoring this error and continuing anyway)

end.

progress: 3.1 s, 29.8 tps, lat 75.596 ms stddev 44.555

progress: 4.0 s, 345.6 tps, lat 86.734 ms stddev 44.257

progress: 5.0 s, 284.0 tps, lat 106.031 ms stddev 74.786

progress: 6.0 s, 321.0 tps, lat 90.318 ms stddev 64.367

progress: 7.0 s, 265.0 tps, lat 117.689 ms stddev 137.011

progress: 8.0 s, 308.0 tps, lat 96.085 ms stddev 63.510

progress: 9.0 s, 247.0 tps, lat 117.740 ms stddev 130.024

progress: 10.0 s, 331.0 tps, lat 91.790 ms stddev 104.197

progress: 11.0 s, 276.0 tps, lat 108.908 ms stddev 121.539

progress: 12.0 s, 269.0 tps, lat 109.838 ms stddev 107.313

progress: 13.0 s, 303.0 tps, lat 99.651 ms stddev 84.757

progress: 14.0 s, 300.0 tps, lat 93.716 ms stddev 77.808

progress: 15.0 s, 295.0 tps, lat 106.247 ms stddev 136.072

progress: 16.0 s, 305.0 tps, lat 94.900 ms stddev 88.672

progress: 17.0 s, 272.0 tps, lat 108.254 ms stddev 103.558

progress: 18.0 s, 224.0 tps, lat 141.480 ms stddev 262.932

progress: 19.0 s, 293.0 tps, lat 106.081 ms stddev 131.669

progress: 20.0 s, 292.0 tps, lat 96.766 ms stddev 87.897

transaction type: get_invoice.sql

scaling factor: 1

query mode: prepared

number of clients: 30

number of threads: 6

duration: 20 s

number of transactions actually processed: 5032

latency average = 103.273 ms

latency stddev = 115.041 ms

tps = 245.795076 (including connections establishing)

tps = 252.400446 (excluding connections establishing)

statement latencies in milliseconds:

103.440 insert into invoices select get_next_invoice(), current_timestamp;

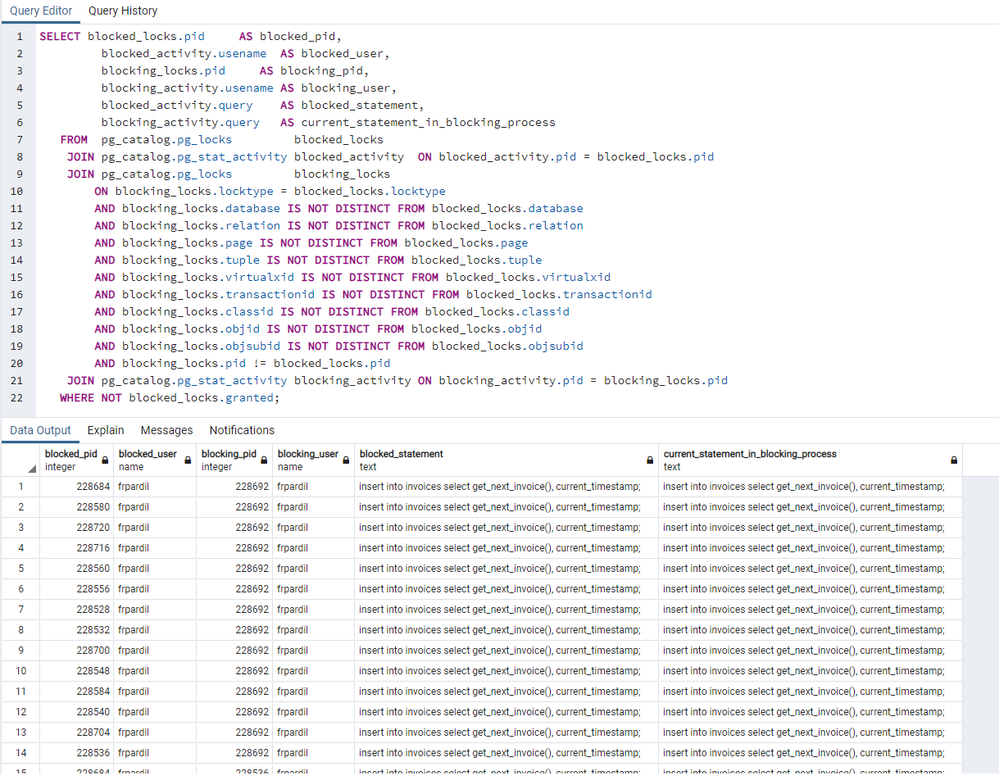

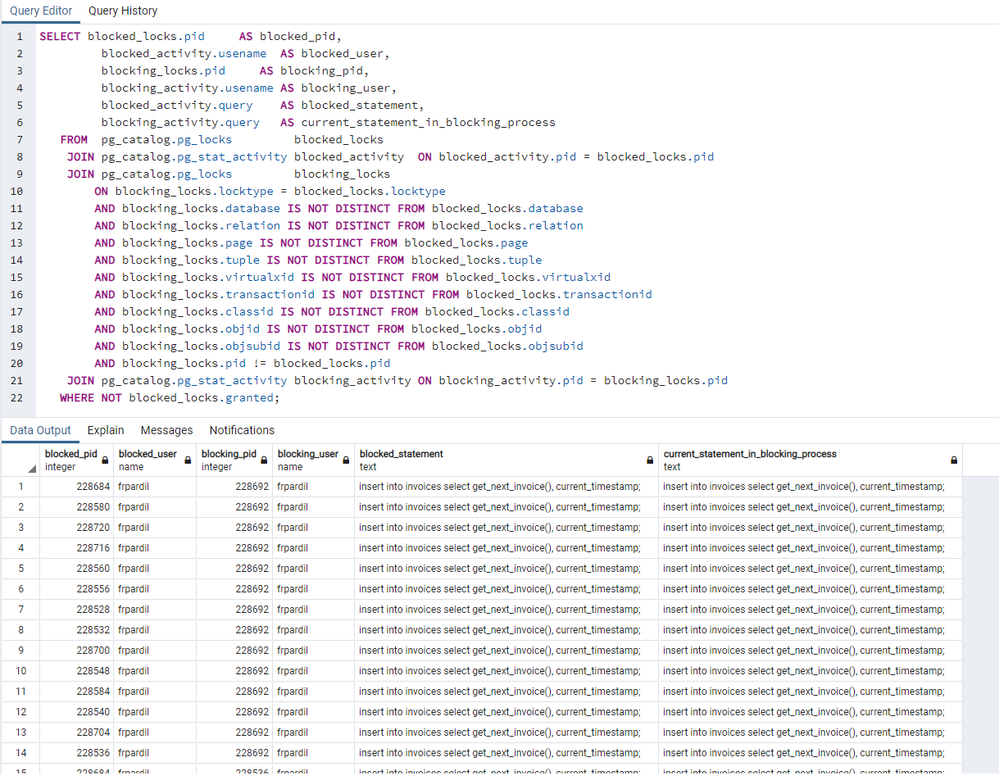

Locks when pgbench is executing

(from https://wiki.postgresql.org/wiki/Lock_Monitoring)

We observe multiple blocked sessions during all the test execution.

In the test results, we see up to 252 Transactions Per Second (tps), a total of 5032 executions, so, in 20 seconds we correctly registered 5032 invoices.

Improved atomic billing sequential numbering

Now, we are going to generate a similar optimized function to improve these numbers and remove locks:

Create sequence

CREATE SEQUENCE IF NOT EXISTS seq_invoice_serial AS BIGINT INCREMENT BY 1 MINVALUE 1 NO MAXVALUE START WITH 1 NO CYCLE OWNED BY NONE;

Function to get next identifier

create or replace function get_next_invoice_improved()

returns varchar as $$

declare

vlastval varchar;

begin

update serials set

yearmonth=to_char(now(),'YYYYMM'),

sequence=(select setval('seq_invoice_serial', 1)),

lastvalue='A'||to_char(now(),'YYYYMM')||'0000001'

where type='invoice' and yearmonth<>to_char(now(),'YYYYMM')

returning lastvalue into vlastval;

if vlastval is null then

select 'A'||to_char(now(),'YYYYMM')||LPAD((nextval('seq_invoice_serial'))::varchar,7,'0') into vlastval;

end if;

return vlastval;

end;

$$ language plpgsql;

Here we use the serials table to update the same row, only when we are in a different month that the already saved, so, only 1 single update will take place every month, in this update we also reset the sequence "seq_invoice_serial" to 1, so we start again for each changed month, but will use the normal sequence to generate the application required format string with a simple select over the sequence: select 'A'||to_char(now(),'YYYYMM')||LPAD((nextval('seq_invoice_serial'))::varchar,7,'0') into vlastval;

Benchmark

To create a benchmark, we will create a file: "get_invoice_improved.sql" with the only command:

insert into invoices select get_next_invoice_improved(), current_timestamp;

In the same server, we execute the same pgbench command using the new get_invoice_improved.sql file:

pgbench -h <servername>.postgres.database.azure.com -p 5432 -U frpardil@<servername> --protocol=prepared -P 1 --time=20 --client=30 -r --jobs=6 test1 -f get_invoice_improved.sql

progress: 3.5 s, 39.9 tps, lat 63.407 ms stddev 42.206

progress: 4.0 s, 633.8 tps, lat 48.170 ms stddev 13.816

progress: 5.0 s, 589.9 tps, lat 50.579 ms stddev 12.461

progress: 6.0 s, 638.1 tps, lat 47.403 ms stddev 2.199

progress: 7.0 s, 649.0 tps, lat 46.602 ms stddev 1.840

progress: 8.0 s, 594.0 tps, lat 50.016 ms stddev 13.926

progress: 9.0 s, 617.0 tps, lat 48.574 ms stddev 4.460

progress: 10.0 s, 593.0 tps, lat 51.081 ms stddev 12.930

progress: 11.0 s, 635.0 tps, lat 46.966 ms stddev 1.955

progress: 12.0 s, 566.9 tps, lat 53.043 ms stddev 24.858

progress: 13.0 s, 574.1 tps, lat 52.268 ms stddev 16.469

progress: 14.0 s, 631.0 tps, lat 47.366 ms stddev 1.824

progress: 15.0 s, 549.0 tps, lat 54.685 ms stddev 29.363

progress: 16.0 s, 634.0 tps, lat 47.730 ms stddev 3.021

progress: 17.0 s, 619.0 tps, lat 48.068 ms stddev 2.890

progress: 18.0 s, 636.0 tps, lat 47.068 ms stddev 2.197

progress: 19.0 s, 596.0 tps, lat 50.293 ms stddev 12.967

progress: 20.0 s, 644.0 tps, lat 46.581 ms stddev 1.508

transaction type: get_invoice_improved.sql

scaling factor: 1

query mode: prepared

number of clients: 30

number of threads: 6

duration: 20 s

number of transactions actually processed: 10260

latency average = 49.356 ms

latency stddev = 13.588 ms

tps = 507.757804 (including connections establishing)

tps = 523.664725 (excluding connections establishing)

statement latencies in milliseconds:

49.840 insert into invoices select get_next_invoice_improved(), current_timestamp;

Locks when pgbench is executing

We don´t observe any session being blocked during all the test execution.

In the test result, we see up to 523 Transactions Per Second (tps), a total of 10260 executions, so, in 20 seconds we correctly registered 10260 invoices, double number of invoices than old locking method.

So, even it seams a little complex, we can remove the locking problem when using user defined logic for monolithically increase identifiers by still using the sequential approach, with some code.